Underwater Multi-Robot Convoying Using Visual Tracking by Detection

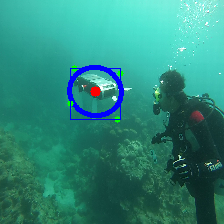

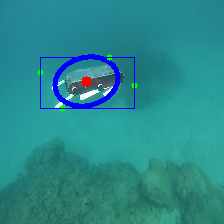

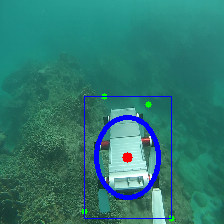

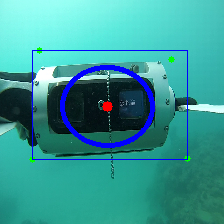

We present a robust multi-robot convoying approach relying on visual detection of the leading agent, thus enabling target following in unstructured 3D environments. Our method is based on the idea of tracking by detection, which interleaves image-based position estimation via temporal filtering with efficient model-based object detection. This approach has the important advantage of mitigating tracking drift (i.e. drifting out of the view of the target), which is a common symptom of model-free trackers and is detrimental to sustaining convoying in practice. To illustrate our solution, we collected extensive footage of an underwater swimming robot in ocean settings, and hand-annotated its location in each frame. Based on this dataset, we present an empirical comparison of multiple tracker variants, including the use of several Convolutional Neural Networks both with and without recurrent connections, as well as frequency-based model-free trackers. We also demonstrate the practicality of this tracking-by-detection strategy in real-world scenarios, by successfully controlling a legged underwater robot in five degrees of freedom to follow another robot's arbitrary motion.

Training, Validation and Test Datasets

We collected a dataset of videos of the Aqua family of hexapods during field trials at McGill University's Bellairs Research Institute in Barbados. This dataset includes approximately 5.2K third-person view images extracted from video footage of the robots swimming in various underwater environments, recorded from the diver's point of view and nearly 10K first-person point of view frames extracted from the tracking robot's camera front-facing camera. We use the third-person video footage for training and validation, and the first-person video footage as a test set. This separation also highlights the way we envision our system to become widely applicable: the training data can be recorded with a handheld unit without necessitating footage from the robot's camera.

Code and Data

You can find the bounding box annotated Aqua dataset here. We will make this available shortly. If you need it sooner feel free to contact the authors. Our extension of YOLO with recurrent connections can be found here. Finally, our modifications of the VGG16 model will be posted here soon.